Nektar++

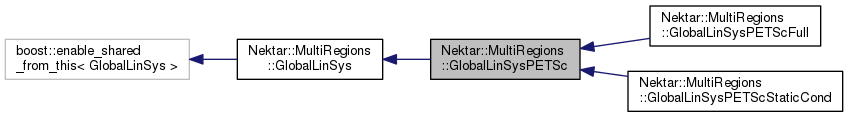

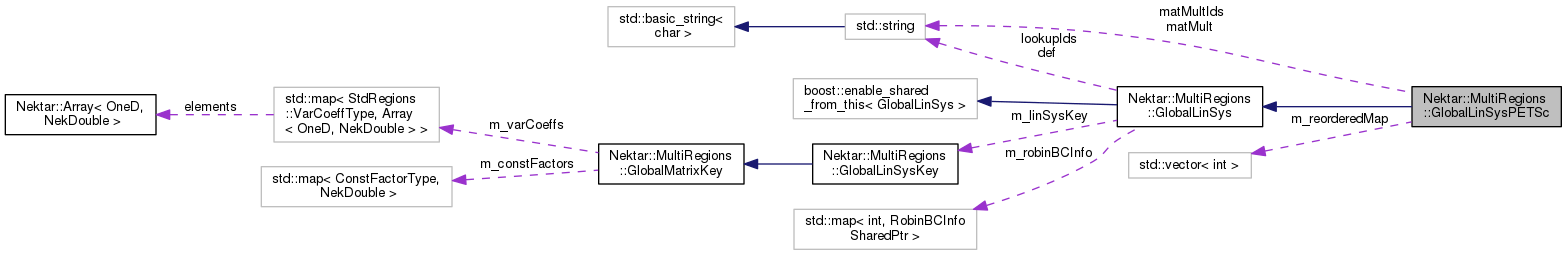

A PETSc global linear system.

#include <GlobalLinSysPETSc.h>

Classes

| Type | Class | Description |
|---|---|---|
| struct | ShellCtx | Internal struct for MatShell and PCShell calls to store current context for callback. |

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | GlobalLinSysPETSc (const GlobalLinSysKey &pKey, const boost::weak_ptr< ExpList > &pExp, const boost::shared_ptr< AssemblyMap > &pLocToGloMap) | Constructor for full direct matrix solve. |
| virtual | ~GlobalLinSysPETSc () | Clean up PETSc objects. |
| virtual void | v_SolveLinearSystem (const int pNumRows, const Array< OneD, const NekDouble > &pInput, Array< OneD, NekDouble > &pOutput, const AssemblyMapSharedPtr &locToGloMap, const int pNumDir) | Solve linear system using PETSc. |

Public Member Functions inherited from Nektar::MultiRegions::GlobalLinSys

| Type | Member | Description |
|---|---|---|
| | GlobalLinSys (const GlobalLinSysKey &pKey, const boost::weak_ptr< ExpList > &pExpList, const boost::shared_ptr< AssemblyMap > &pLocToGloMap) | Constructor for full direct matrix solve. |
| virtual | ~GlobalLinSys () | |
| const GlobalLinSysKey & | GetKey (void) const | Returns the key associated with the system. |
| const boost::weak_ptr< ExpList > & | GetLocMat (void) const | |
| void | InitObject () | |
| void | Initialise (const boost::shared_ptr< AssemblyMap > &pLocToGloMap) | |
| void | Solve (const Array< OneD, const NekDouble > &in, Array< OneD, NekDouble > &out, const AssemblyMapSharedPtr &locToGloMap, const Array< OneD, const NekDouble > &dirForcing=NullNekDouble1DArray) | Solve the linear system for given input and output vectors using a specified local to global map. |
| boost::shared_ptr< GlobalLinSys > | GetSharedThisPtr () | Returns a shared pointer to the current object. |
| int | GetNumBlocks () | |
| DNekScalMatSharedPtr | GetBlock (unsigned int n) | |
| DNekScalBlkMatSharedPtr | GetStaticCondBlock (unsigned int n) | |
| void | DropStaticCondBlock (unsigned int n) | |
| void | SolveLinearSystem (const int pNumRows, const Array< OneD, const NekDouble > &pInput, Array< OneD, NekDouble > &pOutput, const AssemblyMapSharedPtr &locToGloMap, const int pNumDir=0) | Solve the linear system for given input and output vectors. |

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| void | SetUpScatter () | Set up PETSc local (equivalent to Nektar++ global) and global (equivalent to universal) scatter maps. |
| void | SetUpMatVec (int nGlobal, int nDir) | Construct PETSc matrix and vector handles. |
| void | SetUpSolver (NekDouble tolerance) | Set up KSP solver object. |
| void | CalculateReordering (const Array< OneD, const int > &glo2uniMap, const Array< OneD, const int > &glo2unique, const AssemblyMapSharedPtr &pLocToGloMap) | Calculate a reordering of universal IDs for PETSc. |
| virtual void | v_DoMatrixMultiply (const Array< OneD, const NekDouble > &pInput, Array< OneD, NekDouble > &pOutput)=0 | |

Protected Member Functions inherited from Nektar::MultiRegions::GlobalLinSys

| Type | Member | Description |
|---|---|---|
| virtual int | v_GetNumBlocks () | Get the number of blocks in this system. |
| virtual DNekScalMatSharedPtr | v_GetBlock (unsigned int n) | Retrieves the block matrix from the n-th expansion using the matrix key provided by m_linSysKey. |
| virtual DNekScalBlkMatSharedPtr | v_GetStaticCondBlock (unsigned int n) | Retrieves the static condensation block matrices from the n-th expansion using the matrix key provided by m_linSysKey. |
| virtual void | v_DropStaticCondBlock (unsigned int n) | Releases the static condensation block matrices of the n-th expansion from the NekManager using the matrix key provided by m_linSysKey. |
| PreconditionerSharedPtr | CreatePrecon (AssemblyMapSharedPtr asmMap) | Create a preconditioner object from the parameters defined in the supplied assembly map. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| Mat | m_matrix | PETSc matrix object. |
| Vec | m_x | PETSc vector objects used for local storage. |
| Vec | m_b | |
| Vec | m_locVec | |
| KSP | m_ksp | KSP object that represents solver system. |
| PC | m_pc | PCShell for preconditioner. |
| PETScMatMult | m_matMult | Enumerator to select matrix multiplication type. |
| std::vector< int > | m_reorderedMap | Reordering that takes universal IDs to a unique row in the PETSc matrix. |
| VecScatter | m_ctx | PETSc scatter context that takes us between Nektar++ global ordering and PETSc vector ordering. |
| int | m_nLocal | Number of unique degrees of freedom on this process. |
| PreconditionerSharedPtr | m_precon | |

Protected Attributes inherited from Nektar::MultiRegions::GlobalLinSys

| Type | Member | Description |
|---|---|---|
| const GlobalLinSysKey | m_linSysKey | Key associated with this linear system. |
| const boost::weak_ptr< ExpList > | m_expList | Local Matrix System. |
| const std::map< int, RobinBCInfoSharedPtr > | m_robinBCInfo | Robin boundary info. |
| bool | m_verbose | |

Static Private Member Functions

| Type | Member | Description |
|---|---|---|
| static PetscErrorCode | DoMatrixMultiply (Mat M, Vec in, Vec out) | Perform matrix multiplication using Nektar++ routines. |
| static PetscErrorCode | DoPreconditioner (PC pc, Vec in, Vec out) | Apply preconditioning using Nektar++ routines. |
| static void | DoNekppOperation (Vec &in, Vec &out, ShellCtx *ctx, bool precon) | Perform either matrix multiplication or preconditioning using Nektar++ routines. |
| static PetscErrorCode | DoDestroyMatCtx (Mat M) | Destroy matrix shell context object. |
| static PetscErrorCode | DoDestroyPCCtx (PC pc) | Destroy preconditioner context object. |

Static Private Attributes

| Type | Member | Description |
|---|---|---|
| static std::string | matMult | |
| static std::string | matMultIds [] | |

A PETSc global linear system.

Solves a linear system using PETSc.

Solves a linear system using single- or multi-level static condensation.

Definition at line 59 of file GlobalLinSysPETSc.h.

Nektar::MultiRegions::GlobalLinSysPETSc::GlobalLinSysPETSc (const GlobalLinSysKey &pKey, const boost::weak_ptr< ExpList > &pExp, const boost::shared_ptr< AssemblyMap > &pLocToGloMap)

Constructor for full direct matrix solve.

Definition at line 65 of file GlobalLinSysPETSc.cpp.

References Nektar::LibUtilities::CommMpi::GetComm(), Nektar::MultiRegions::GlobalLinSys::m_expList, m_matMult, and m_matrix.

Nektar::MultiRegions::GlobalLinSysPETSc::~GlobalLinSysPETSc () [virtual]

Clean up PETSc objects.

Note that if SessionReader::Finalize is called before the end of the program, PETSc may already have been finalized, in which case the PETSc objects can no longer be deallocated. If so, the destructor does nothing and leaves the operating system to reclaim the memory when the process exits.

Definition at line 110 of file GlobalLinSysPETSc.cpp.

References m_b, m_ksp, m_locVec, m_matrix, m_pc, and m_x.
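The finalization guard described above can be sketched in standard C++ as an owner whose destructor skips cleanup once the library has shut down. This is a minimal, hypothetical illustration: the flag g_libraryFinalized stands in for PETSc's own state (PETSc exposes this through PetscFinalized()), the counter stands in for calls such as KSPDestroy() or VecDestroy(), and GuardedHandle is not a Nektar++ type.

```cpp
#include <cassert>

// Hypothetical stand-in for "has the external library been finalized?".
// In a PETSc code this state would be queried from PETSc, not stored here.
static bool g_libraryFinalized = false;

// Counts handles actually released; stands in for KSPDestroy()/VecDestroy().
static int g_releasedHandles = 0;

// Hypothetical owner of an external library handle.
class GuardedHandle
{
public:
    GuardedHandle() = default;
    GuardedHandle(const GuardedHandle &) = delete;
    GuardedHandle &operator=(const GuardedHandle &) = delete;

    ~GuardedHandle()
    {
        if (g_libraryFinalized)
        {
            // The library is already shut down, so its objects can no longer
            // be released: do nothing and let the OS reclaim memory at exit.
            return;
        }
        assert(m_live);
        m_live = false;
        ++g_releasedHandles;
    }

private:
    bool m_live = true;
};
```

Checking the flag inside the destructor keeps the guard in one place, so every exit path (normal scope end or stack unwinding) applies the same rule.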

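void Nektar::MultiRegions::GlobalLinSysPETSc::CalculateReordering (const Array< OneD, const int > &glo2uniMap, const Array< OneD, const int > &glo2unique, const AssemblyMapSharedPtr &pLocToGloMap) [protected]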

Calculate a reordering of universal IDs for PETSc.

PETSc requires every global and universal degree of freedom to have a unique, contiguous index that gives its row position inside the matrix. Gs (the gather-scatter library) does not currently guarantee this, so this routine constructs a new universal mapping.

| Parameter | Description |
|---|---|
| glo2uniMap | Global to universal map. |
| glo2unique | Global to unique map. |
| pLocToGloMap | Assembly map for this system. |

Definition at line 235 of file GlobalLinSysPETSc.cpp.

References ASSERTL0, Nektar::MultiRegions::GlobalLinSys::m_expList, m_nLocal, m_reorderedMap, Nektar::LibUtilities::ReduceSum, and Vmath::Vsum().

Referenced by Nektar::MultiRegions::GlobalLinSysPETScFull::GlobalLinSysPETScFull(), and Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement().
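A serial sketch of the kind of contiguous renumbering described above follows, assuming that glo2unique flags (with 1) the single process that owns each shared degree of freedom. Several processes are simulated in one address space: owned DOFs on process r receive consecutive IDs starting at the exclusive prefix sum of the owned counts of processes before r, and the std::map lookup stands in for the parallel exchange a real MPI implementation would need to propagate IDs to non-owned copies. RankDofs and ReorderUniversalIds() are hypothetical names; this is not the Nektar++ implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// Per-process input, simulating several MPI ranks in one process.
// glo2uniMap[i]: universal ID of global DOF i on this rank.
// glo2unique[i]: 1 if this rank is the designated owner of that DOF, else 0.
struct RankDofs
{
    std::vector<int> glo2uniMap;
    std::vector<int> glo2unique;
};

// Owned DOFs on rank r get IDs [offset_r, offset_r + nLocal_r), where offset_r
// is the running (exclusive prefix) sum of owned counts on earlier ranks.
// Non-owned copies then look up the owner's new ID.
std::vector<std::vector<int>> ReorderUniversalIds(
    const std::vector<RankDofs> &ranks)
{
    std::map<int, int> uniToPetsc;
    int offset = 0;
    for (const RankDofs &r : ranks)
    {
        assert(r.glo2uniMap.size() == r.glo2unique.size());
        for (std::size_t i = 0; i < r.glo2uniMap.size(); ++i)
        {
            if (r.glo2unique[i])
            {
                uniToPetsc[r.glo2uniMap[i]] = offset++;
            }
        }
    }

    // Stand-in for the parallel exchange: every copy reads the owner's ID.
    std::vector<std::vector<int>> reordered;
    for (const RankDofs &r : ranks)
    {
        std::vector<int> local(r.glo2uniMap.size());
        for (std::size_t i = 0; i < local.size(); ++i)
        {
            local[i] = uniToPetsc.at(r.glo2uniMap[i]);
        }
        reordered.push_back(local);
    }
    return reordered;
}
```

For example, if rank 0 holds universal IDs {10, 20, 30} and owns the first two, while rank 1 holds {30, 40, 20} and owns the first two, the new rows are {0, 1, 2} on rank 0 and {2, 3, 1} on rank 1.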

PetscErrorCode Nektar::MultiRegions::GlobalLinSysPETSc::DoDestroyMatCtx (Mat M) [static, private]

Destroy matrix shell context object.

Note that the matrix shell and preconditioner share a common context, so it may already have been deallocated by DoDestroyPCCtx(), in which case nothing is done.

| Parameter | Description |
|---|---|
| M | Matrix shell object. |

Definition at line 486 of file GlobalLinSysPETSc.cpp.

Referenced by SetUpMatVec().

PetscErrorCode Nektar::MultiRegions::GlobalLinSysPETSc::DoDestroyPCCtx (PC pc) [static, private]

Destroy preconditioner context object.

Note that the matrix shell and preconditioner share a common context, so it may already have been deallocated by DoDestroyMatCtx(), in which case nothing is done.

| Parameter | Description |
|---|---|
| pc | Preconditioner object. |

Definition at line 504 of file GlobalLinSysPETSc.cpp.

Referenced by SetUpMatVec().
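Because the two shells share one context, whichever destroy callback runs second must not free it again. One way to make that safe, sketched here in standard C++, is shared ownership: each shell holds its own heap-allocated std::shared_ptr to the common context, and its destroy callback only releases that reference. This is a swapped-in technique, not the check the Nektar++ callbacks perform; FakeShell, SharedCtx, AttachSharedCtx() and DestroySharedCtx() are hypothetical stand-ins for PETSc's MatShell/PCShell objects and their destroy hooks.

```cpp
#include <cassert>
#include <memory>

// Counts live contexts so the example can check for leaks or double frees.
static int g_liveContexts = 0;

// Hypothetical shared context, standing in for GlobalLinSysPETSc::ShellCtx.
struct SharedCtx
{
    SharedCtx() { ++g_liveContexts; }
    ~SharedCtx() { --g_liveContexts; }
    int nGlobal = 0;
    int nDir = 0;
};

// Minimal stand-in for a C-style shell object (such as a MatShell or PCShell)
// that stores an opaque context pointer plus a destroy callback.
struct FakeShell
{
    FakeShell() = default;
    FakeShell(const FakeShell &) = delete;
    FakeShell &operator=(const FakeShell &) = delete;
    ~FakeShell()
    {
        if (destroy)
            destroy(ctx);
    }

    void *ctx = nullptr;
    void (*destroy)(void *) = nullptr;
};

// Destroy callback: drop this shell's reference only. The context itself is
// deleted when the last reference goes away, whatever the destruction order.
void DestroySharedCtx(void *p)
{
    delete static_cast<std::shared_ptr<SharedCtx> *>(p);
}

// Give the shell its own owning reference to the common context.
void AttachSharedCtx(FakeShell &shell, const std::shared_ptr<SharedCtx> &ctx)
{
    shell.ctx = new std::shared_ptr<SharedCtx>(ctx);
    shell.destroy = &DestroySharedCtx;
}
```

The design trades one small extra allocation per shell for not having to reason about which callback runs first.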

PetscErrorCode Nektar::MultiRegions::GlobalLinSysPETSc::DoMatrixMultiply (Mat M, Vec in, Vec out) [static, private]

Perform matrix multiplication using Nektar++ routines.

This static function uses Nektar++ routines to calculate the matrix-vector product of M with the vector in, storing the result in out.

| Parameter | Description |
|---|---|
| M | Original MatShell matrix, which stores the ShellCtx object. |
| in | Input vector. |
| out | Output vector. |

Definition at line 438 of file GlobalLinSysPETSc.cpp.

References DoNekppOperation().

Referenced by SetUpMatVec().

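void Nektar::MultiRegions::GlobalLinSysPETSc::DoNekppOperation (Vec &in, Vec &out, ShellCtx *ctx, bool precon) [static, private]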

Perform either matrix multiplication or preconditioning using Nektar++ routines.

This static function uses Nektar++ routines either to calculate the matrix-vector product of the system matrix with in or to apply the preconditioner to in, depending on precon, storing the result in out.

| Parameter | Description |
|---|---|
| in | Input vector. |
| out | Output vector. |
| ctx | ShellCtx object that points to our instance of GlobalLinSysPETSc. |
| precon | If true, apply the preconditioner; if false, perform a matrix multiplication. |

Definition at line 384 of file GlobalLinSysPETSc.cpp.

References Nektar::MultiRegions::GlobalLinSysPETSc::ShellCtx::linSys, m_ctx, m_locVec, m_precon, m_reorderedMap, Nektar::MultiRegions::GlobalLinSysPETSc::ShellCtx::nDir, Nektar::MultiRegions::GlobalLinSysPETSc::ShellCtx::nGlobal, v_DoMatrixMultiply(), and Vmath::Vcopy().

Referenced by DoMatrixMultiply(), and DoPreconditioner().
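The callback pattern described above can be sketched without PETSc: a C-style entry point receives only an opaque context pointer, recovers the ShellCtx from it, and forwards to one shared worker that chooses between the matrix product and the preconditioner. In PETSc the context is attached with MatShellSetContext() or PCShellSetContext() and retrieved with the matching getters; here a plain void* and std::vector<double> stand in for that machinery. LinSysBase, v_ApplyPrecon(), DiagonalSys and the callback names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

class LinSysBase;

// Hypothetical mirror of ShellCtx: one context shared by both callbacks.
struct ShellCtx
{
    int nGlobal;        // carried as in the original; unused in this sketch
    int nDir;           // carried as in the original; unused in this sketch
    LinSysBase *linSys; // the object whose operators the callbacks invoke
};

// Hypothetical abstract system. v_DoMatrixMultiply mirrors the pure virtual
// member documented here; v_ApplyPrecon simplifies the m_precon object.
class LinSysBase
{
public:
    virtual ~LinSysBase() = default;
    virtual void v_DoMatrixMultiply(const std::vector<double> &in,
                                    std::vector<double> &out) = 0;
    virtual void v_ApplyPrecon(const std::vector<double> &in,
                               std::vector<double> &out) = 0;
};

// Shared worker in the spirit of DoNekppOperation(): a flag picks the operator.
static void DoOperation(const std::vector<double> &in, std::vector<double> &out,
                        ShellCtx *ctx, bool precon)
{
    assert(ctx != nullptr && ctx->linSys != nullptr);
    out.assign(in.size(), 0.0);
    if (precon)
        ctx->linSys->v_ApplyPrecon(in, out);
    else
        ctx->linSys->v_DoMatrixMultiply(in, out);
}

// C-style entry points that receive only an opaque context pointer, in the
// spirit of the static DoMatrixMultiply() and DoPreconditioner() callbacks.
int MultCallback(void *opaque, const std::vector<double> &in,
                 std::vector<double> &out)
{
    DoOperation(in, out, static_cast<ShellCtx *>(opaque), false);
    return 0;
}

int PreconCallback(void *opaque, const std::vector<double> &in,
                   std::vector<double> &out)
{
    DoOperation(in, out, static_cast<ShellCtx *>(opaque), true);
    return 0;
}

// Example concrete system: a diagonal matrix with a Jacobi preconditioner.
class DiagonalSys : public LinSysBase
{
public:
    explicit DiagonalSys(const std::vector<double> &diag) : m_diag(diag) {}

    void v_DoMatrixMultiply(const std::vector<double> &in,
                            std::vector<double> &out) override
    {
        for (std::size_t i = 0; i < in.size(); ++i)
            out[i] = m_diag[i] * in[i];
    }

    void v_ApplyPrecon(const std::vector<double> &in,
                       std::vector<double> &out) override
    {
        for (std::size_t i = 0; i < in.size(); ++i)
            out[i] = in[i] / m_diag[i];
    }

private:
    std::vector<double> m_diag;
};
```

Routing both callbacks through one worker keeps the context handling in a single place, which mirrors why DoNekppOperation() takes the precon flag.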

PetscErrorCode Nektar::MultiRegions::GlobalLinSysPETSc::DoPreconditioner (PC pc, Vec in, Vec out) [static, private]

Apply preconditioning using Nektar++ routines.

This static function uses Nektar++ routines to apply the preconditioner GlobalLinSysPETSc::m_precon, obtained from the context stored in pc, to the vector in, storing the result in out.

| Parameter | Description |
|---|---|
| pc | Preconditioner object that stores the ShellCtx. |
| in | Input vector. |
| out | Output vector. |

Definition at line 463 of file GlobalLinSysPETSc.cpp.

References DoNekppOperation().

Referenced by SetUpMatVec().

void Nektar::MultiRegions::GlobalLinSysPETSc::SetUpMatVec (int nGlobal, int nDir) [protected]

Construct PETSc matrix and vector handles.

| Parameter | Description |
|---|---|
| nGlobal | Number of global degrees of freedom in the system (on this processor). |
| nDir | Number of Dirichlet degrees of freedom (on this processor). |

Definition at line 312 of file GlobalLinSysPETSc.cpp.

References DoDestroyMatCtx(), DoDestroyPCCtx(), DoMatrixMultiply(), DoPreconditioner(), Nektar::MultiRegions::ePETScMatMultShell, Nektar::MultiRegions::GlobalLinSysPETSc::ShellCtx::linSys, m_b, m_matMult, m_matrix, m_nLocal, m_pc, m_x, Nektar::MultiRegions::GlobalLinSysPETSc::ShellCtx::nDir, and Nektar::MultiRegions::GlobalLinSysPETSc::ShellCtx::nGlobal.

Referenced by Nektar::MultiRegions::GlobalLinSysPETScFull::GlobalLinSysPETScFull(), and Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement().

void Nektar::MultiRegions::GlobalLinSysPETSc::SetUpScatter () [protected]

Set up PETSc local (equivalent to Nektar++ global) and global (equivalent to universal) scatter maps.

These maps are used in GlobalLinSysPETSc::v_SolveLinearSystem to scatter the solution vector back to each process.

Definition at line 200 of file GlobalLinSysPETSc.cpp.

References m_ctx, m_locVec, m_reorderedMap, and m_x.

Referenced by Nektar::MultiRegions::GlobalLinSysPETScFull::GlobalLinSysPETScFull(), and Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement().
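The effect of scattering the solution back can be sketched serially: each process reads, for each of its own global degrees of freedom, the PETSc row given by the reordering, so a DOF shared between processes receives the same value on each of them. This is a hypothetical illustration only: the "distributed" vector is a single std::vector holding every PETSc row, and GatherToGlobal() is not a Nektar++ function. In PETSc itself such a mapping is typically expressed with index sets (IS) and VecScatterCreate(); the exact calls Nektar++ uses are not shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical serial sketch of the reverse scatter performed with m_ctx.
// petscSolution holds all PETSc rows; reorderedMap plays the role of
// m_reorderedMap (this process's global DOF index -> PETSc row).
std::vector<double> GatherToGlobal(const std::vector<double> &petscSolution,
                                   const std::vector<int> &reorderedMap)
{
    std::vector<double> local(reorderedMap.size());
    for (std::size_t i = 0; i < reorderedMap.size(); ++i)
    {
        const int row = reorderedMap[i];
        assert(row >= 0 &&
               static_cast<std::size_t>(row) < petscSolution.size());
        local[i] = petscSolution[row];
    }
    return local;
}
```

With a PETSc solution {0.5, 1.5, 2.5, 3.5} and a process whose reordering is {2, 3, 1}, the process receives {2.5, 3.5, 1.5} in its own global ordering.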

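void Nektar::MultiRegions::GlobalLinSysPETSc::SetUpSolver (NekDouble tolerance) [protected]

Set up KSP solver object.

This is a reasonably generic setup; most solver types can be changed using the .petscrc file.

| Parameter | Description |
|---|---|
| tolerance | Residual tolerance to converge to. |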

Definition at line 521 of file GlobalLinSysPETSc.cpp.

References Nektar::MultiRegions::ePETScMatMultShell, m_ksp, m_matMult, m_matrix, and m_pc.

Referenced by Nektar::MultiRegions::GlobalLinSysPETScFull::GlobalLinSysPETScFull(), and Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement().
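To illustrate what a Krylov solver does with a shell (matrix-free) operator, a shell preconditioner and a residual tolerance, here is a minimal matrix-free preconditioned conjugate gradient in standard C++. It is a sketch under stated assumptions, not the KSP configuration used here: CG is swapped in for illustration and only suits symmetric positive definite systems (PETSc's default KSP type is GMRES, and the type can be changed at run time, for example with -ksp_type cg in the .petscrc file), and the plain relative-residual stopping test simplifies PETSc's convergence criteria. SolvePCG, Op and Vector are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

using Vector = std::vector<double>;
// An operator "shell": computes out = Op(in) without exposing a matrix.
using Op = std::function<void(const Vector &, Vector &)>;

static double Dot(const Vector &a, const Vector &b)
{
    double s = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i)
        s += a[i] * b[i];
    return s;
}

// Matrix-free preconditioned conjugate gradient for an SPD operator.
// applyM applies the preconditioner (an approximate inverse of A).
// Stops once ||b - A x|| <= tolerance * ||b||; returns the iteration count.
int SolvePCG(const Op &applyA, const Op &applyM, const Vector &b, Vector &x,
             double tolerance, int maxIter)
{
    assert(b.size() == x.size());
    const std::size_t n = b.size();
    Vector r(n), z(n), p(n), Ap(n);

    const double bNorm = std::sqrt(Dot(b, b));
    if (bNorm == 0.0)
    {
        x.assign(n, 0.0); // zero right-hand side: zero solution
        return 0;
    }

    applyA(x, Ap);
    for (std::size_t i = 0; i < n; ++i)
        r[i] = b[i] - Ap[i];
    applyM(r, z);
    p = z;
    double rz = Dot(r, z);

    for (int k = 0; k < maxIter; ++k)
    {
        if (std::sqrt(Dot(r, r)) <= tolerance * bNorm)
            return k;
        applyA(p, Ap);
        const double alpha = rz / Dot(p, Ap);
        for (std::size_t i = 0; i < n; ++i)
        {
            x[i] += alpha * p[i];
            r[i] -= alpha * Ap[i];
        }
        applyM(r, z);
        const double rzNew = Dot(r, z);
        const double beta = rzNew / rz;
        for (std::size_t i = 0; i < n; ++i)
            p[i] = z[i] + beta * p[i];
        rz = rzNew;
    }
    return maxIter;
}
```

For example, with A = [[4, 1], [1, 3]], b = (1, 2) and a Jacobi preconditioner, the solver should reach x close to (1/11, 7/11) within two iterations, as CG does in exact arithmetic for a 2-by-2 system.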

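virtual void Nektar::MultiRegions::GlobalLinSysPETSc::v_DoMatrixMultiply (const Array< OneD, const NekDouble > &pInput, Array< OneD, NekDouble > &pOutput) [protected, pure virtual]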

Implemented in Nektar::MultiRegions::GlobalLinSysPETScStaticCond, and Nektar::MultiRegions::GlobalLinSysPETScFull.

Referenced by DoNekppOperation().

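void Nektar::MultiRegions::GlobalLinSysPETSc::v_SolveLinearSystem (const int pNumRows, const Array< OneD, const NekDouble > &pInput, Array< OneD, NekDouble > &pOutput, const AssemblyMapSharedPtr &locToGloMap, const int pNumDir) [virtual]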

Solve linear system using PETSc.

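The general strategy behind a PETSc solve is to copy the input values into the PETSc vector m_b, solve the system with the KSP object m_ksp and place the result in m_x, scatter the result back into m_locVec using the scatter context m_ctx, and finally copy m_locVec into the output array pOutput.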

Implements Nektar::MultiRegions::GlobalLinSys.

Definition at line 147 of file GlobalLinSysPETSc.cpp.

References ASSERTL0, Nektar::MultiRegions::GlobalLinSys::CreatePrecon(), Nektar::MultiRegions::ePETScMatMultShell, m_b, m_ctx, m_ksp, m_locVec, m_matMult, m_precon, m_reorderedMap, m_x, and Vmath::Vcopy().

Vec Nektar::MultiRegions::GlobalLinSysPETSc::m_b [protected]

Definition at line 81 of file GlobalLinSysPETSc.h.

Referenced by SetUpMatVec(), v_SolveLinearSystem(), and ~GlobalLinSysPETSc().

VecScatter Nektar::MultiRegions::GlobalLinSysPETSc::m_ctx [protected]

PETSc scatter context that takes us between Nektar++ global ordering and PETSc vector ordering.

Definition at line 93 of file GlobalLinSysPETSc.h.

Referenced by DoNekppOperation(), SetUpScatter(), and v_SolveLinearSystem().

KSP Nektar::MultiRegions::GlobalLinSysPETSc::m_ksp [protected]

KSP object that represents solver system.

Definition at line 83 of file GlobalLinSysPETSc.h.

Referenced by SetUpSolver(), v_SolveLinearSystem(), and ~GlobalLinSysPETSc().

Vec Nektar::MultiRegions::GlobalLinSysPETSc::m_locVec [protected]

Definition at line 81 of file GlobalLinSysPETSc.h.

Referenced by DoNekppOperation(), SetUpScatter(), v_SolveLinearSystem(), and ~GlobalLinSysPETSc().

PETScMatMult Nektar::MultiRegions::GlobalLinSysPETSc::m_matMult [protected]

Enumerator to select matrix multiplication type.

Definition at line 87 of file GlobalLinSysPETSc.h.

Referenced by GlobalLinSysPETSc(), SetUpMatVec(), SetUpSolver(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement(), and v_SolveLinearSystem().

Mat Nektar::MultiRegions::GlobalLinSysPETSc::m_matrix [protected]

PETSc matrix object.

Definition at line 79 of file GlobalLinSysPETSc.h.

Referenced by GlobalLinSysPETSc(), Nektar::MultiRegions::GlobalLinSysPETScFull::GlobalLinSysPETScFull(), SetUpMatVec(), SetUpSolver(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement(), and ~GlobalLinSysPETSc().

int Nektar::MultiRegions::GlobalLinSysPETSc::m_nLocal [protected]

Number of unique degrees of freedom on this process.

Definition at line 95 of file GlobalLinSysPETSc.h.

Referenced by CalculateReordering(), and SetUpMatVec().

PC Nektar::MultiRegions::GlobalLinSysPETSc::m_pc [protected]

PCShell for preconditioner.

Definition at line 85 of file GlobalLinSysPETSc.h.

Referenced by SetUpMatVec(), SetUpSolver(), and ~GlobalLinSysPETSc().

PreconditionerSharedPtr Nektar::MultiRegions::GlobalLinSysPETSc::m_precon [protected]

Definition at line 97 of file GlobalLinSysPETSc.h.

Referenced by DoNekppOperation(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::GlobalLinSysPETScStaticCond(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_BasisInvTransform(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_BasisTransform(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_InitObject(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_PreSolve(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_Recurse(), and v_SolveLinearSystem().

std::vector< int > Nektar::MultiRegions::GlobalLinSysPETSc::m_reorderedMap [protected]

Reordering that takes universal IDs to a unique row in the PETSc matrix.

Definition at line 90 of file GlobalLinSysPETSc.h.

Referenced by CalculateReordering(), DoNekppOperation(), Nektar::MultiRegions::GlobalLinSysPETScFull::GlobalLinSysPETScFull(), SetUpScatter(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement(), and v_SolveLinearSystem().

Vec Nektar::MultiRegions::GlobalLinSysPETSc::m_x [protected]

PETSc vector objects used for local storage.

Definition at line 81 of file GlobalLinSysPETSc.h.

Referenced by SetUpMatVec(), SetUpScatter(), v_SolveLinearSystem(), and ~GlobalLinSysPETSc().

std::string Nektar::MultiRegions::GlobalLinSysPETSc::matMult [static, private]

Definition at line 134 of file GlobalLinSysPETSc.h.

std::string Nektar::MultiRegions::GlobalLinSysPETSc::matMultIds[] [static, private]

Definition at line 135 of file GlobalLinSysPETSc.h.

1.8.8
