
Nektar++

|

|

Nektar++

|

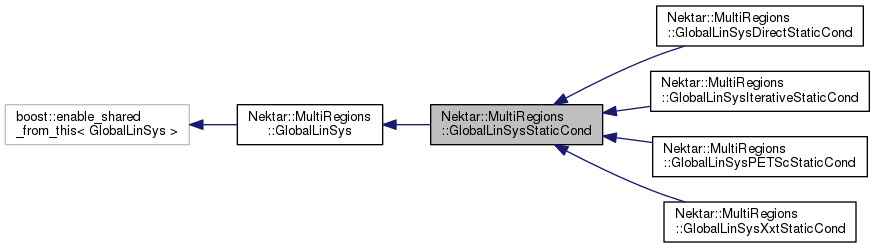

A global linear system.

#include <GlobalLinSysStaticCond.h>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | GlobalLinSysStaticCond (const GlobalLinSysKey &mkey, const boost::weak_ptr< ExpList > &pExpList, const boost::shared_ptr< AssemblyMap > &locToGloMap) | Constructor for full direct matrix solve. |
| virtual | ~GlobalLinSysStaticCond () | |

Public Member Functions inherited from Nektar::MultiRegions::GlobalLinSys

| Return type | Member | Description |
|---|---|---|
| | GlobalLinSys (const GlobalLinSysKey &pKey, const boost::weak_ptr< ExpList > &pExpList, const boost::shared_ptr< AssemblyMap > &pLocToGloMap) | Constructor for full direct matrix solve. |
| virtual | ~GlobalLinSys () | |
| const GlobalLinSysKey & | GetKey (void) const | Returns the key associated with the system. |
| const boost::weak_ptr< ExpList > & | GetLocMat (void) const | |
| void | InitObject () | |
| void | Initialise (const boost::shared_ptr< AssemblyMap > &pLocToGloMap) | |
| void | Solve (const Array< OneD, const NekDouble > &in, Array< OneD, NekDouble > &out, const AssemblyMapSharedPtr &locToGloMap, const Array< OneD, const NekDouble > &dirForcing=NullNekDouble1DArray) | Solve the linear system for given input and output vectors using a specified local to global map. |
| boost::shared_ptr< GlobalLinSys > | GetSharedThisPtr () | Returns a shared pointer to the current object. |
| int | GetNumBlocks () | |
| DNekScalMatSharedPtr | GetBlock (unsigned int n) | |
| DNekScalBlkMatSharedPtr | GetStaticCondBlock (unsigned int n) | |
| void | DropStaticCondBlock (unsigned int n) | |
| void | SolveLinearSystem (const int pNumRows, const Array< OneD, const NekDouble > &pInput, Array< OneD, NekDouble > &pOutput, const AssemblyMapSharedPtr &locToGloMap, const int pNumDir=0) | Solve the linear system for given input and output vectors. |

Protected Member Functions

| Return type | Member | Description |
|---|---|---|
| virtual DNekScalBlkMatSharedPtr | v_PreSolve (int scLevel, NekVector< NekDouble > &F_GlobBnd) | |
| virtual void | v_BasisTransform (Array< OneD, NekDouble > &pInOut, int offset) | |
| virtual void | v_BasisInvTransform (Array< OneD, NekDouble > &pInOut) | |
| virtual void | v_AssembleSchurComplement (boost::shared_ptr< AssemblyMap > pLoctoGloMap) | |
| virtual int | v_GetNumBlocks () | Get the number of blocks in this system. |
| virtual GlobalLinSysStaticCondSharedPtr | v_Recurse (const GlobalLinSysKey &mkey, const boost::weak_ptr< ExpList > &pExpList, const DNekScalBlkMatSharedPtr pSchurCompl, const DNekScalBlkMatSharedPtr pBinvD, const DNekScalBlkMatSharedPtr pC, const DNekScalBlkMatSharedPtr pInvD, const boost::shared_ptr< AssemblyMap > &locToGloMap)=0 | |
| virtual void | v_Solve (const Array< OneD, const NekDouble > &in, Array< OneD, NekDouble > &out, const AssemblyMapSharedPtr &locToGloMap, const Array< OneD, const NekDouble > &dirForcing=NullNekDouble1DArray) | Solve the linear system for given input and output vectors using a specified local to global map. |
| virtual void | v_InitObject () | |
| virtual void | v_Initialise (const boost::shared_ptr< AssemblyMap > &locToGloMap) | Initialise this object. |
| void | SetupTopLevel (const boost::shared_ptr< AssemblyMap > &locToGloMap) | Set up the storage for the Schur complement or the top level of the multi-level Schur complement. |
| void | ConstructNextLevelCondensedSystem (const boost::shared_ptr< AssemblyMap > &locToGloMap) | |

Protected Member Functions inherited from Nektar::MultiRegions::GlobalLinSys

| Return type | Member | Description |
|---|---|---|
| virtual DNekScalMatSharedPtr | v_GetBlock (unsigned int n) | Retrieves the block matrix from the n-th expansion using the matrix key provided by m_linSysKey. |
| virtual DNekScalBlkMatSharedPtr | v_GetStaticCondBlock (unsigned int n) | Retrieves the static condensation block matrices from the n-th expansion using the matrix key provided by m_linSysKey. |
| virtual void | v_DropStaticCondBlock (unsigned int n) | Releases the static condensation block matrices of the n-th expansion from the NekManager, using the matrix key provided by m_linSysKey. |
| PreconditionerSharedPtr | CreatePrecon (AssemblyMapSharedPtr asmMap) | Create a preconditioner object from the parameters defined in the supplied assembly map. |

Protected Attributes | |

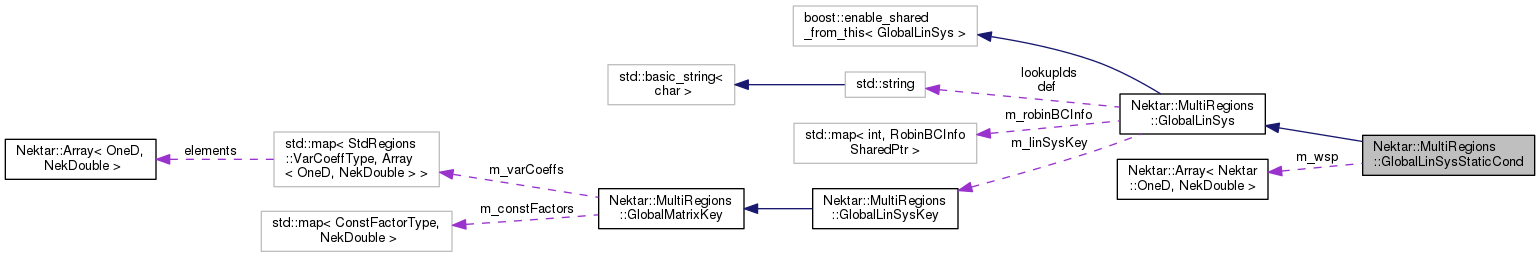

| GlobalLinSysStaticCondSharedPtr | m_recursiveSchurCompl |

| Schur complement for Direct Static Condensation. More... | |

| DNekScalBlkMatSharedPtr | m_schurCompl |

| Block Schur complement matrix. More... | |

| DNekScalBlkMatSharedPtr | m_BinvD |

Block  matrix. More... matrix. More... | |

| DNekScalBlkMatSharedPtr | m_C |

Block  matrix. More... matrix. More... | |

| DNekScalBlkMatSharedPtr | m_invD |

Block  matrix. More... matrix. More... | |

| boost::shared_ptr< AssemblyMap > | m_locToGloMap |

| Local to global map. More... | |

| Array< OneD, NekDouble > | m_wsp |

| Workspace array for matrix multiplication. More... | |

Protected Attributes inherited from Nektar::MultiRegions::GlobalLinSys Protected Attributes inherited from Nektar::MultiRegions::GlobalLinSys | |

| const GlobalLinSysKey | m_linSysKey |

| Key associated with this linear system. More... | |

| const boost::weak_ptr< ExpList > | m_expList |

| Local Matrix System. More... | |

| const std::map< int, RobinBCInfoSharedPtr > | m_robinBCInfo |

| Robin boundary info. More... | |

| bool | m_verbose |

A global linear system.

Solves a linear system using single- or multi-level static condensation.

Definition at line 55 of file GlobalLinSysStaticCond.h.

Nektar::MultiRegions::GlobalLinSysStaticCond::GlobalLinSysStaticCond (const GlobalLinSysKey &pKey, const boost::weak_ptr< ExpList > &pExpList, const boost::shared_ptr< AssemblyMap > &pLocToGloMap)

Constructor for full direct matrix solve.

For a matrix system of the form

$$
\left[ \begin{array}{cc} \boldsymbol{A} & \boldsymbol{B}\\ \boldsymbol{C} & \boldsymbol{D} \end{array} \right] \left[ \begin{array}{c} \boldsymbol{x_1}\\ \boldsymbol{x_2} \end{array}\right] = \left[ \begin{array}{c} \boldsymbol{y_1}\\ \boldsymbol{y_2} \end{array}\right],
$$

where $\boldsymbol{D}$ and $\boldsymbol{A}-\boldsymbol{B}\boldsymbol{D}^{-1}\boldsymbol{C}$ are invertible, store and assemble a static condensation system according to a given local to global mapping. m_linSys is constructed by AssembleSchurComplement().
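Eliminating $\boldsymbol{x_2}$ using the second block row of the system above gives the condensed system. This is the standard Schur-complement derivation, written out here for reference rather than taken from the Nektar++ source:

```latex
\begin{aligned}
\boldsymbol{C}\boldsymbol{x_1} + \boldsymbol{D}\boldsymbol{x_2} = \boldsymbol{y_2}
  &\;\Longrightarrow\; \boldsymbol{x_2} = \boldsymbol{D}^{-1}\left(\boldsymbol{y_2} - \boldsymbol{C}\boldsymbol{x_1}\right),\\
\boldsymbol{A}\boldsymbol{x_1} + \boldsymbol{B}\boldsymbol{x_2} = \boldsymbol{y_1}
  &\;\Longrightarrow\; \left(\boldsymbol{A} - \boldsymbol{B}\boldsymbol{D}^{-1}\boldsymbol{C}\right)\boldsymbol{x_1}
    = \boldsymbol{y_1} - \boldsymbol{B}\boldsymbol{D}^{-1}\boldsymbol{y_2}.
\end{aligned}
```

Solving the condensed system therefore requires $\boldsymbol{D}$ and the Schur complement $\boldsymbol{A}-\boldsymbol{B}\boldsymbol{D}^{-1}\boldsymbol{C}$ to be invertible, after which $\boldsymbol{x_2}$ follows from the first line. The factors that appear, $\boldsymbol{B}\boldsymbol{D}^{-1}$, $\boldsymbol{C}$ and $\boldsymbol{D}^{-1}$, match the names of the stored blocks m_BinvD, m_C and m_invD; that correspondence is inferred from the member names.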

Parameters:

| Parameter | Description |
|---|---|
| pKey | Associated matrix key. |
| pExpList | Local matrix system (expansion list). |
| pLocToGloMap | Local to global mapping. |

Definition at line 76 of file GlobalLinSysStaticCond.cpp.
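Assembling a global matrix from element matrices "according to a given local to global mapping" amounts to a scatter-add. The sketch below is a minimal, self-contained illustration and not the Nektar++ AssemblyMap API: the types LocalToGlobal and ElementMatrix and the function AssembleGlobal are hypothetical, the global matrix is dense, and the per-entry sign (+1 or -1) is an assumption modelling the orientation flips some modal bases need. In a static condensation setting the same scatter-add would be applied to each element's Schur complement, that is, to boundary degrees of freedom only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical local-to-global map for one element: global[i] is the
// global index of local degree of freedom i, sign[i] is +1 or -1.
struct LocalToGlobal
{
    std::vector<int> global;
    std::vector<double> sign;
};

// Dense, square, row-major element matrix of size n x n.
struct ElementMatrix
{
    std::size_t n;
    std::vector<double> data;
    double operator()(std::size_t i, std::size_t j) const { return data[i * n + j]; }
};

// Scatter-add every element matrix into a dense nGlobal x nGlobal matrix:
// G[gi][gj] += sign_i * sign_j * E[i][j].
std::vector<double> AssembleGlobal(const std::vector<ElementMatrix>& elements,
                                   const std::vector<LocalToGlobal>& maps,
                                   std::size_t nGlobal)
{
    assert(elements.size() == maps.size());
    std::vector<double> global(nGlobal * nGlobal, 0.0);
    for (std::size_t e = 0; e < elements.size(); ++e)
    {
        const ElementMatrix& E = elements[e];
        const LocalToGlobal& m = maps[e];
        assert(m.global.size() == E.n && m.sign.size() == E.n);
        for (std::size_t i = 0; i < E.n; ++i)
        {
            for (std::size_t j = 0; j < E.n; ++j)
            {
                const std::size_t gi = static_cast<std::size_t>(m.global[i]);
                const std::size_t gj = static_cast<std::size_t>(m.global[j]);
                assert(gi < nGlobal && gj < nGlobal);
                global[gi * nGlobal + gj] += m.sign[i] * m.sign[j] * E(i, j);
            }
        }
    }
    return global;
}
```

For two linear 1D elements sharing node 1, each with stiffness matrix [[1, -1], [-1, 1]], the assembled 3 x 3 matrix has 2 on the shared diagonal entry, which is the usual overlap contribution.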

Nektar::MultiRegions::GlobalLinSysStaticCond::~GlobalLinSysStaticCond () [virtual]

Definition at line 97 of file GlobalLinSysStaticCond.cpp.

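void Nektar::MultiRegions::GlobalLinSysStaticCond::ConstructNextLevelCondensedSystem (const boost::shared_ptr< AssemblyMap > &locToGloMap) [protected]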

Definition at line 346 of file GlobalLinSysStaticCond.cpp.

References Nektar::MemoryManager< DataType >::AllocateSharedPtr(), ASSERTL0, Nektar::eDIAGONAL, Nektar::eFULL, Nektar::StdRegions::eHelmholtz, Nektar::StdRegions::eLaplacian, Nektar::StdRegions::eMass, Nektar::eSYMMETRIC, Nektar::eWrapper, Nektar::MultiRegions::GlobalMatrixKey::GetMatrixType(), Nektar::MultiRegions::GlobalLinSys::m_expList, Nektar::MultiRegions::GlobalLinSys::m_linSysKey, m_recursiveSchurCompl, m_schurCompl, sign, and v_Recurse().

Referenced by v_Initialise().

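void Nektar::MultiRegions::GlobalLinSysStaticCond::SetupTopLevel (const boost::shared_ptr< AssemblyMap > &locToGloMap) [protected]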

Set up the storage for the Schur complement or the top level of the multi-level Schur complement.

For the first level in multi-level static condensation, or the only level in the case of single-level static condensation, allocate the condensed matrices and populate them with the local matrices retrieved from the expansion list.

Definition at line 299 of file GlobalLinSysStaticCond.cpp.

References Nektar::MemoryManager< DataType >::AllocateSharedPtr(), Nektar::eDIAGONAL, Nektar::StdRegions::eHybridDGHelmBndLam, Nektar::MultiRegions::GlobalMatrixKey::GetMatrixType(), m_BinvD, m_C, Nektar::MultiRegions::GlobalLinSys::m_expList, m_invD, Nektar::MultiRegions::GlobalLinSys::m_linSysKey, m_schurCompl, Nektar::MultiRegions::GlobalLinSys::v_GetBlock(), and Nektar::MultiRegions::GlobalLinSys::v_GetStaticCondBlock().

Referenced by Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_InitObject(), v_InitObject(), and Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_InitObject().
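SetupTopLevel() populates the condensed matrices from the local (per-element) matrices. For a single element split into boundary blocks $\boldsymbol{A}$, $\boldsymbol{B}$ and interior blocks $\boldsymbol{C}$, $\boldsymbol{D}$, the condensed quantities can be formed as below. This is a minimal dense sketch under stated assumptions: Dense, CondensedBlocks and CondenseElement are hypothetical names rather than Nektar++ types, matrices are row-major std::vector<double>, and $\boldsymbol{D}$ is inverted by Gauss-Jordan elimination with partial pivoting. The references to eDIAGONAL above suggest the library keeps such per-element blocks in block-diagonal matrices.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Dense row-major matrix (hypothetical helper, not a Nektar++ type).
struct Dense
{
    std::size_t rows, cols;
    std::vector<double> a;
    Dense(std::size_t r, std::size_t c) : rows(r), cols(c), a(r * c, 0.0) {}
    double& operator()(std::size_t i, std::size_t j) { return a[i * cols + j]; }
    double operator()(std::size_t i, std::size_t j) const { return a[i * cols + j]; }
};

Dense Multiply(const Dense& X, const Dense& Y)
{
    assert(X.cols == Y.rows);
    Dense Z(X.rows, Y.cols);
    for (std::size_t i = 0; i < X.rows; ++i)
        for (std::size_t k = 0; k < X.cols; ++k)
            for (std::size_t j = 0; j < Y.cols; ++j)
                Z(i, j) += X(i, k) * Y(k, j);
    return Z;
}

// Gauss-Jordan inversion with partial pivoting; asserts D is nonsingular.
Dense Inverse(Dense D)
{
    assert(D.rows == D.cols);
    const std::size_t n = D.rows;
    Dense inv(n, n);
    for (std::size_t i = 0; i < n; ++i) inv(i, i) = 1.0;
    for (std::size_t c = 0; c < n; ++c)
    {
        std::size_t p = c;  // pivot row: largest magnitude in column c
        for (std::size_t r = c + 1; r < n; ++r)
            if (std::fabs(D(r, c)) > std::fabs(D(p, c))) p = r;
        assert(std::fabs(D(p, c)) > 1e-14);
        for (std::size_t j = 0; j < n; ++j)
        {
            std::swap(D(c, j), D(p, j));
            std::swap(inv(c, j), inv(p, j));
        }
        const double piv = D(c, c);
        for (std::size_t j = 0; j < n; ++j) { D(c, j) /= piv; inv(c, j) /= piv; }
        for (std::size_t r = 0; r < n; ++r)  // clear column c in all other rows
        {
            if (r == c) continue;
            const double f = D(r, c);
            for (std::size_t j = 0; j < n; ++j)
            {
                D(r, j) -= f * D(c, j);
                inv(r, j) -= f * inv(c, j);
            }
        }
    }
    return inv;
}

// Per-element condensed blocks, mirroring the roles of m_schurCompl,
// m_BinvD, m_C and m_invD (an assumption based on their descriptions).
struct CondensedBlocks
{
    Dense schur;  // A - B D^{-1} C
    Dense BinvD;  // B D^{-1}
    Dense C;      // C, kept for back-substitution
    Dense invD;   // D^{-1}
};

CondensedBlocks CondenseElement(const Dense& A, const Dense& B,
                                const Dense& C, const Dense& D)
{
    assert(A.rows == B.rows && B.cols == D.rows && C.rows == D.rows && C.cols == A.cols);
    Dense invD = Inverse(D);
    Dense BinvD = Multiply(B, invD);
    Dense BinvDC = Multiply(BinvD, C);
    Dense schur = A;
    for (std::size_t k = 0; k < schur.a.size(); ++k) schur.a[k] -= BinvDC.a[k];
    return {schur, BinvD, C, invD};
}
```

With one boundary and two interior unknowns, A = [4], B = [1 0], C = [1 0]^T and D = diag(2, 4), the local Schur complement is 4 - 0.5 = 3.5.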

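void Nektar::MultiRegions::GlobalLinSysStaticCond::v_AssembleSchurComplement (boost::shared_ptr< AssemblyMap > pLoctoGloMap) [inline, protected, virtual]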

Reimplemented in Nektar::MultiRegions::GlobalLinSysIterativeStaticCond, Nektar::MultiRegions::GlobalLinSysXxtStaticCond, Nektar::MultiRegions::GlobalLinSysPETScStaticCond, and Nektar::MultiRegions::GlobalLinSysDirectStaticCond.

Definition at line 87 of file GlobalLinSysStaticCond.h.

Referenced by v_Initialise().

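void Nektar::MultiRegions::GlobalLinSysStaticCond::v_BasisInvTransform (Array< OneD, NekDouble > &pInOut) [inline, protected, virtual]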

Reimplemented in Nektar::MultiRegions::GlobalLinSysIterativeStaticCond, and Nektar::MultiRegions::GlobalLinSysPETScStaticCond.

Definition at line 81 of file GlobalLinSysStaticCond.h.

Referenced by v_Solve().

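void Nektar::MultiRegions::GlobalLinSysStaticCond::v_BasisTransform (Array< OneD, NekDouble > &pInOut, int offset) [inline, protected, virtual]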

Reimplemented in Nektar::MultiRegions::GlobalLinSysIterativeStaticCond, and Nektar::MultiRegions::GlobalLinSysPETScStaticCond.

Definition at line 74 of file GlobalLinSysStaticCond.h.

Referenced by v_Solve().

int Nektar::MultiRegions::GlobalLinSysStaticCond::v_GetNumBlocks () [protected, virtual]

Get the number of blocks in this system.

At the top level this corresponds to the number of elements in the expansion list.

Reimplemented from Nektar::MultiRegions::GlobalLinSys.

Definition at line 287 of file GlobalLinSysStaticCond.cpp.

References m_schurCompl.
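At the top level, a block-diagonal matrix with one dense block per element has exactly as many blocks as there are elements, which is what v_GetNumBlocks() reports. The class below is a hypothetical stand-in for a block-diagonal type such as the DNekScalBlkMat used here; BlockDiagonalMatrix, AddBlock, NumBlocks and Multiply are assumed names, not Nektar++ API. Its matrix-vector product touches only the diagonal blocks, each acting on its own slice of the vector.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One square, dense, row-major block per element (hypothetical type).
struct Block
{
    std::size_t n;
    std::vector<double> a;
};

class BlockDiagonalMatrix
{
public:
    void AddBlock(const Block& b)
    {
        assert(b.a.size() == b.n * b.n);
        m_blocks.push_back(b);
        m_size += b.n;
    }

    // Analogous to v_GetNumBlocks(): one block per element at the top level.
    std::size_t NumBlocks() const { return m_blocks.size(); }

    // Total number of rows (and columns) of the full matrix.
    std::size_t Size() const { return m_size; }

    // y = M x, applying each diagonal block to its own slice of x.
    std::vector<double> Multiply(const std::vector<double>& x) const
    {
        assert(x.size() == m_size);
        std::vector<double> y(m_size, 0.0);
        std::size_t offset = 0;
        for (const Block& b : m_blocks)
        {
            for (std::size_t i = 0; i < b.n; ++i)
                for (std::size_t j = 0; j < b.n; ++j)
                    y[offset + i] += b.a[i * b.n + j] * x[offset + j];
            offset += b.n;
        }
        return y;
    }

private:
    std::vector<Block> m_blocks;
    std::size_t m_size = 0;
};
```

Storing blocks separately keeps memory and work proportional to the sum of squared block sizes rather than to the square of the total size.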

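void Nektar::MultiRegions::GlobalLinSysStaticCond::v_Initialise (const boost::shared_ptr< AssemblyMap > &locToGloMap) [protected, virtual]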

Initialise this object.

If at the last level of recursion (or the only level in the case of single-level static condensation), assemble the Schur complement. For other levels, in the case of multi-level static condensation, the next level of the condensed system is computed.

| locToGloMap | Local to global mapping. |

Reimplemented from Nektar::MultiRegions::GlobalLinSys.

Definition at line 265 of file GlobalLinSysStaticCond.cpp.

References ConstructNextLevelCondensedSystem(), m_locToGloMap, m_wsp, and v_AssembleSchurComplement().
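The recursion described for v_Initialise() (condense, then either solve the Schur complement or condense it again) can be illustrated on a dense matrix. This sketch is a simplified stand-in for the real ConstructNextLevelCondensedSystem() and v_Recurse() chain, under explicit assumptions: at every level the first half of the unknowns is treated as "boundary" and the rest as "interior" (the real partition comes from the mesh and the assembly map), the names SolveDirect and SolveMultiLevel are hypothetical, and plain Gaussian elimination replaces the Nektar++ matrix types.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

using Matrix = std::vector<std::vector<double>>;
using Vector = std::vector<double>;

// Solve M X = R for every column of R by Gaussian elimination with partial pivoting.
Matrix SolveDirect(Matrix M, Matrix R)
{
    const std::size_t n = M.size();
    const std::size_t m = R.empty() ? 0 : R[0].size();
    for (std::size_t c = 0; c < n; ++c)
    {
        std::size_t p = c;
        for (std::size_t r = c + 1; r < n; ++r)
            if (std::fabs(M[r][c]) > std::fabs(M[p][c])) p = r;
        assert(std::fabs(M[p][c]) > 1e-14);
        std::swap(M[c], M[p]);
        std::swap(R[c], R[p]);
        for (std::size_t r = c + 1; r < n; ++r)
        {
            const double f = M[r][c] / M[c][c];
            for (std::size_t j = c; j < n; ++j) M[r][j] -= f * M[c][j];
            for (std::size_t j = 0; j < m; ++j) R[r][j] -= f * R[c][j];
        }
    }
    for (std::size_t c = n; c-- > 0;)  // back substitution, last row first
    {
        for (std::size_t j = 0; j < m; ++j)
        {
            double s = R[c][j];
            for (std::size_t k = c + 1; k < n; ++k) s -= M[c][k] * R[k][j];
            R[c][j] = s / M[c][c];
        }
    }
    return R;
}

// Solve K x = y by multi-level static condensation. At the last level
// (level == maxLevels) or for small systems the system is solved directly.
Vector SolveMultiLevel(const Matrix& K, const Vector& y, std::size_t level,
                       std::size_t maxLevels, std::size_t minSize)
{
    const std::size_t n = K.size();
    if (level == maxLevels || n <= minSize)
    {
        Matrix rhs(n, Vector(1));
        for (std::size_t i = 0; i < n; ++i) rhs[i][0] = y[i];
        const Matrix x = SolveDirect(K, rhs);
        Vector out(n);
        for (std::size_t i = 0; i < n; ++i) out[i] = x[i][0];
        return out;
    }
    const std::size_t nb = n / 2, ni = n - nb;  // boundary / interior counts
    // Interior block D and right-hand sides [C | y2], so one solve gives D^{-1}C and D^{-1}y2.
    Matrix D(ni, Vector(ni)), rhs(ni, Vector(nb + 1));
    for (std::size_t i = 0; i < ni; ++i)
    {
        for (std::size_t j = 0; j < ni; ++j) D[i][j] = K[nb + i][nb + j];
        for (std::size_t j = 0; j < nb; ++j) rhs[i][j] = K[nb + i][j];
        rhs[i][nb] = y[nb + i];
    }
    const Matrix invDCy = SolveDirect(D, rhs);
    // Schur complement S = A - B D^{-1} C and condensed rhs g = y1 - B D^{-1} y2.
    Matrix S(nb, Vector(nb));
    Vector g(nb);
    for (std::size_t i = 0; i < nb; ++i)
    {
        g[i] = y[i];
        for (std::size_t k = 0; k < ni; ++k) g[i] -= K[i][nb + k] * invDCy[k][nb];
        for (std::size_t j = 0; j < nb; ++j)
        {
            S[i][j] = K[i][j];
            for (std::size_t k = 0; k < ni; ++k) S[i][j] -= K[i][nb + k] * invDCy[k][j];
        }
    }
    // Next level: condense the Schur complement again.
    const Vector x1 = SolveMultiLevel(S, g, level + 1, maxLevels, minSize);
    // Back-substitute the interior: x2 = D^{-1} y2 - D^{-1} C x1.
    Vector x(n);
    for (std::size_t i = 0; i < nb; ++i) x[i] = x1[i];
    for (std::size_t i = 0; i < ni; ++i)
    {
        x[nb + i] = invDCy[i][nb];
        for (std::size_t j = 0; j < nb; ++j) x[nb + i] -= invDCy[i][j] * x1[j];
    }
    return x;
}
```

Passing maxLevels = 0 reduces this to a plain direct solve, and maxLevels = 1 corresponds to single-level static condensation; larger values keep condensing the Schur complement.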

void Nektar::MultiRegions::GlobalLinSysStaticCond::v_InitObject () [protected, virtual]

Reimplemented from Nektar::MultiRegions::GlobalLinSys.

Reimplemented in Nektar::MultiRegions::GlobalLinSysIterativeStaticCond, and Nektar::MultiRegions::GlobalLinSysPETScStaticCond.

Definition at line 85 of file GlobalLinSysStaticCond.cpp.

References Nektar::MultiRegions::GlobalLinSys::Initialise(), m_locToGloMap, and SetupTopLevel().

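DNekScalBlkMatSharedPtr Nektar::MultiRegions::GlobalLinSysStaticCond::v_PreSolve (int scLevel, NekVector< NekDouble > &F_GlobBnd) [inline, protected, virtual]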

Reimplemented in Nektar::MultiRegions::GlobalLinSysIterativeStaticCond, and Nektar::MultiRegions::GlobalLinSysPETScStaticCond.

Definition at line 67 of file GlobalLinSysStaticCond.h.

References m_schurCompl.

Referenced by v_Solve().

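GlobalLinSysStaticCondSharedPtr Nektar::MultiRegions::GlobalLinSysStaticCond::v_Recurse (const GlobalLinSysKey &mkey, const boost::weak_ptr< ExpList > &pExpList, const DNekScalBlkMatSharedPtr pSchurCompl, const DNekScalBlkMatSharedPtr pBinvD, const DNekScalBlkMatSharedPtr pC, const DNekScalBlkMatSharedPtr pInvD, const boost::shared_ptr< AssemblyMap > &locToGloMap) [protected, pure virtual]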

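void Nektar::MultiRegions::GlobalLinSysStaticCond::v_Solve (const Array< OneD, const NekDouble > &in, Array< OneD, NekDouble > &out, const AssemblyMapSharedPtr &locToGloMap, const Array< OneD, const NekDouble > &dirForcing = NullNekDouble1DArray) [protected, virtual]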

Solve the linear system for given input and output vectors using a specified local to global map.

Implements Nektar::MultiRegions::GlobalLinSys.

Definition at line 106 of file GlobalLinSysStaticCond.cpp.

References Nektar::DiagonalBlockFullScalMatrixMultiply(), Nektar::eWrapper, Vmath::Fill(), Nektar::NekVector< DataType >::GetPtr(), m_BinvD, m_C, m_invD, m_recursiveSchurCompl, m_wsp, Nektar::Multiply(), Nektar::MultiRegions::GlobalLinSys::SolveLinearSystem(), Nektar::Subtract(), v_BasisInvTransform(), v_BasisTransform(), v_PreSolve(), Vmath::Vadd(), Vmath::Vcopy(), and Vmath::Vsub().

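DNekScalBlkMatSharedPtr Nektar::MultiRegions::GlobalLinSysStaticCond::m_BinvD [protected]

Block $\boldsymbol{B}\boldsymbol{D}^{-1}$ matrix.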

Definition at line 109 of file GlobalLinSysStaticCond.h.

Referenced by Nektar::MultiRegions::GlobalLinSysDirectStaticCond::GlobalLinSysDirectStaticCond(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::GlobalLinSysIterativeStaticCond(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::GlobalLinSysPETScStaticCond(), Nektar::MultiRegions::GlobalLinSysXxtStaticCond::GlobalLinSysXxtStaticCond(), SetupTopLevel(), Nektar::MultiRegions::GlobalLinSysDirectStaticCond::v_AssembleSchurComplement(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement(), and v_Solve().

DNekScalBlkMatSharedPtr Nektar::MultiRegions::GlobalLinSysStaticCond::m_C [protected]

Block $\boldsymbol{C}$ matrix.

Definition at line 111 of file GlobalLinSysStaticCond.h.

Referenced by Nektar::MultiRegions::GlobalLinSysDirectStaticCond::GlobalLinSysDirectStaticCond(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::GlobalLinSysIterativeStaticCond(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::GlobalLinSysPETScStaticCond(), Nektar::MultiRegions::GlobalLinSysXxtStaticCond::GlobalLinSysXxtStaticCond(), SetupTopLevel(), Nektar::MultiRegions::GlobalLinSysDirectStaticCond::v_AssembleSchurComplement(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement(), and v_Solve().

DNekScalBlkMatSharedPtr Nektar::MultiRegions::GlobalLinSysStaticCond::m_invD [protected]

Block $\boldsymbol{D}^{-1}$ matrix.

Definition at line 113 of file GlobalLinSysStaticCond.h.

Referenced by Nektar::MultiRegions::GlobalLinSysDirectStaticCond::GlobalLinSysDirectStaticCond(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::GlobalLinSysIterativeStaticCond(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::GlobalLinSysPETScStaticCond(), Nektar::MultiRegions::GlobalLinSysXxtStaticCond::GlobalLinSysXxtStaticCond(), SetupTopLevel(), Nektar::MultiRegions::GlobalLinSysDirectStaticCond::v_AssembleSchurComplement(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement(), and v_Solve().

boost::shared_ptr< AssemblyMap > Nektar::MultiRegions::GlobalLinSysStaticCond::m_locToGloMap [protected]

Local to global map.

Definition at line 115 of file GlobalLinSysStaticCond.h.

Referenced by Nektar::MultiRegions::GlobalLinSysXxtStaticCond::GlobalLinSysXxtStaticCond(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_AssembleSchurComplement(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_DoMatrixMultiply(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_DoMatrixMultiply(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_GetStaticCondBlock(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_GetStaticCondBlock(), v_Initialise(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_InitObject(), v_InitObject(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_InitObject(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_PreSolve(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_PreSolve(), and Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_UniqueMap().

GlobalLinSysStaticCondSharedPtr Nektar::MultiRegions::GlobalLinSysStaticCond::m_recursiveSchurCompl [protected]

Schur complement for Direct Static Condensation.

Definition at line 105 of file GlobalLinSysStaticCond.h.

Referenced by ConstructNextLevelCondensedSystem(), and v_Solve().

DNekScalBlkMatSharedPtr Nektar::MultiRegions::GlobalLinSysStaticCond::m_schurCompl [protected]

Block Schur complement matrix.

Definition at line 107 of file GlobalLinSysStaticCond.h.

Referenced by ConstructNextLevelCondensedSystem(), Nektar::MultiRegions::GlobalLinSysDirectStaticCond::GlobalLinSysDirectStaticCond(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::GlobalLinSysIterativeStaticCond(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::GlobalLinSysPETScStaticCond(), Nektar::MultiRegions::GlobalLinSysXxtStaticCond::GlobalLinSysXxtStaticCond(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::PrepareLocalSchurComplement(), SetupTopLevel(), Nektar::MultiRegions::GlobalLinSysDirectStaticCond::v_AssembleSchurComplement(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_AssembleSchurComplement(), Nektar::MultiRegions::GlobalLinSysXxtStaticCond::v_AssembleSchurComplement(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_AssembleSchurComplement(), v_GetNumBlocks(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_GetStaticCondBlock(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_GetStaticCondBlock(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_InitObject(), Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_InitObject(), v_PreSolve(), Nektar::MultiRegions::GlobalLinSysPETScStaticCond::v_PreSolve(), and Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_PreSolve().

Array< OneD, NekDouble > Nektar::MultiRegions::GlobalLinSysStaticCond::m_wsp [protected]

Workspace array for matrix multiplication.

Definition at line 117 of file GlobalLinSysStaticCond.h.

Referenced by Nektar::MultiRegions::GlobalLinSysIterativeStaticCond::v_DoMatrixMultiply(), v_Initialise(), and v_Solve().

1.8.8
